Why Reliable AI Needs Data Agents, Not Better Prompts

Read time: 4 min | Published in: arXiv, 2025

When Language Becomes a Failure Mode

Large language models appear capable of reasoning, planning, and answering complex questions. Yet a subtle fragility sits at the center of their behavior.

Ask a model a question and it responds correctly. Ask the same question with slightly different wording and the answer changes or collapses entirely. The underlying information need remains identical, but the system behaves as if it were not.

This phenomenon, known as prompt sensitivity, is not a usability quirk. It is a systems-level reliability problem. When language becomes the primary interface for execution, small linguistic variations can silently determine success or failure.

For autonomous AI systems, this is unacceptable.

Measuring Prompt Sensitivity at Scale

The paper discussed here introduces Prompt Sensitivity Prediction, a task that asks whether a language model will answer a prompt correctly before the prompt is executed.

To study this, the authors construct PromptSET, a benchmark composed of thousands of prompts paired with near-identical variations. Each variation preserves the same information need but alters surface form.

The results are stark. Even when prompts are highly similar in meaning, models frequently fail on some versions while succeeding on others. Existing approaches such as text classification, query difficulty estimation, and even LLM self-evaluation struggle to predict these failures reliably.
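To make the idea concrete, here is a minimal Python sketch of how per-variation outcomes for one information need could be summarized. The names `VariationResult`, `success_rate`, and `is_sensitive` are hypothetical, the correctness labels are invented for illustration, and this is not the metric or data format used by PromptSET.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariationResult:
    """One prompt variation and whether the model answered it correctly."""
    prompt: str
    correct: bool


def success_rate(results: list[VariationResult]) -> float:
    """Fraction of variations the model answered correctly."""
    if not results:
        raise ValueError("need at least one variation")
    return sum(r.correct for r in results) / len(results)


def is_sensitive(results: list[VariationResult]) -> bool:
    """True when variations of the same need disagree: some succeed, some fail."""
    return len({r.correct for r in results}) > 1


# Illustrative labels only (assumption): three phrasings of one question.
group = [
    VariationResult("Who wrote Hamlet?", True),
    VariationResult("Hamlet was written by whom?", True),
    VariationResult("Name the author of the play Hamlet.", False),
]

print(success_rate(group), is_sensitive(group))
```

A group where every variation succeeds (or every variation fails) is stable under this definition; only mixed outcomes count as sensitivity.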

The conclusion is clear. Prompt quality alone cannot guarantee correctness.

The Deeper Issue: Unstructured Execution

Prompt sensitivity exposes a deeper design flaw in many AI systems.

Prompts are treated as unstructured strings. They are generated, modified, chained, and executed without any guarantees about stability or observability. When a failure occurs, there is no systematic way to understand why.

This is manageable when humans are in the loop. It becomes dangerous when agents operate autonomously, generate their own prompts, and act on the outputs.

Reliability cannot be enforced at the prompt level. It must be enforced at the system level.

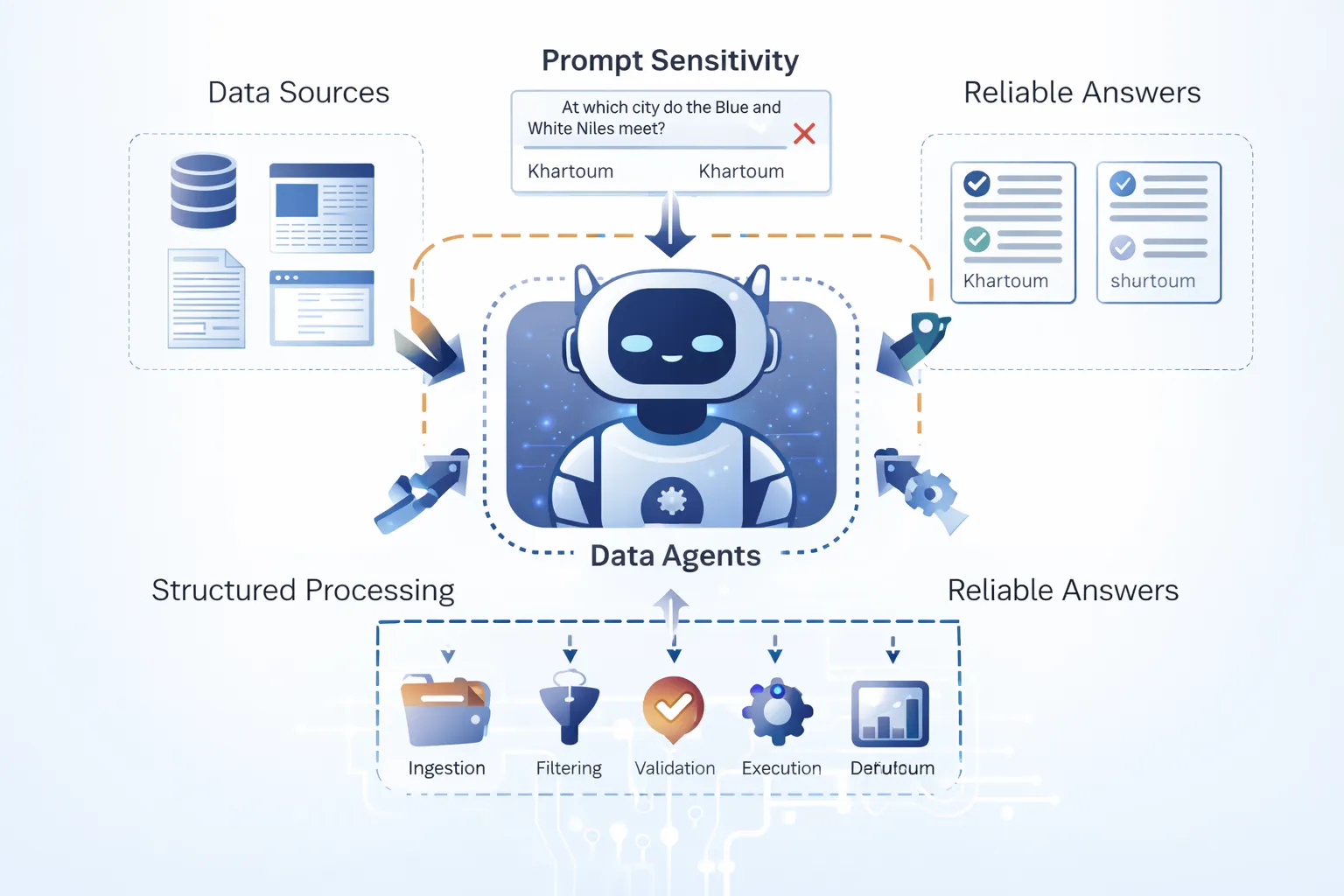

Data Agents as a Reliability Layer

This is where data agents become essential.

A data agent is not a clever prompt template. It is a structured execution unit that wraps language models inside deterministic pipelines.

At a minimum, a data agent:

- Ingests data from explicit sources

- Applies defined preprocessing and filtering steps

- Interacts with models using constrained schemas

- Validates outputs against expectations

- Produces structured signals rather than free-form text
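The five responsibilities above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: `Signal`, `run_agent`, `validate`, and `stub_model` are hypothetical names, and the model is stood in for by a plain function so the example runs without any external service.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Signal:
    """Structured output emitted instead of free-form text."""
    label: str
    valid: bool


def validate(reply: str, allowed_labels: set[str]) -> Signal:
    """Accept only JSON objects whose 'label' is one of the allowed values."""
    try:
        payload = json.loads(reply)
    except json.JSONDecodeError:
        return Signal(label="", valid=False)
    label = payload.get("label") if isinstance(payload, dict) else None
    if not isinstance(label, str) or label not in allowed_labels:
        return Signal(label="", valid=False)
    return Signal(label=label, valid=True)


def run_agent(records: list[str], model: Callable[[str], str],
              allowed_labels: set[str]) -> list[Signal]:
    signals = []
    for raw in records:                      # explicit data source
        text = raw.strip()                   # defined preprocessing
        if not text:                         # defined filtering
            continue
        prompt = (                           # constrained schema in the prompt
            'Reply with JSON {"label": <one of '
            f"{sorted(allowed_labels)}>}}.\nText: {text}"
        )
        signals.append(validate(model(prompt), allowed_labels))
    return signals


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption for this sketch)."""
    return '{"label": "positive"}' if "great" in prompt else "not json"


signals = run_agent(["  great product ", "", "meh"], stub_model,
                    {"positive", "negative"})
print(signals)
```

The malformed reply is not passed downstream as text. It becomes an explicit `valid=False` signal that the surrounding system can count, log, or retry.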

In this architecture, prompts are no longer the interface. They are an internal mechanism.

Designing for Prompt Sensitivity, Not Against It

PromptSET shows that prompt sensitivity is pervasive and hard to predict in advance. Data agents are designed with this assumption, not in denial of it.

Instead of trusting a single formulation, agents can:

- Normalize an information need into a structured representation

- Generate multiple controlled prompt realizations

- Execute them independently

- Measure consistency across responses

- Detect instability when answers diverge
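One way to read these steps as code is the sketch below. It assumes a very simple structured intent (a relation and a subject), three hand-written templates, and exact-match agreement after light normalization; `Intent`, `TEMPLATES`, `realize`, `consistency`, and `stub_model` are all hypothetical names, and the stub's answers are invented to show a divergent case.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Intent:
    """Structured representation of an information need."""
    relation: str
    subject: str


# Controlled prompt realizations of the same intent (illustrative templates).
TEMPLATES = (
    "What is the {relation} of {subject}?",
    "Tell me the {relation} of {subject}.",
    "{subject}: what is its {relation}?",
)


def realize(intent: Intent) -> list[str]:
    return [t.format(relation=intent.relation, subject=intent.subject)
            for t in TEMPLATES]


def normalize_answer(answer: str) -> str:
    """Lowercase, trim, drop a trailing period, collapse whitespace."""
    return " ".join(answer.lower().strip().rstrip(".").split())


def consistency(intent: Intent, model: Callable[[str], str]) -> tuple[str, float]:
    """Run every realization independently; return the most common answer
    and the fraction of realizations that agree with it."""
    answers = [normalize_answer(model(p)) for p in realize(intent)]
    top, count = Counter(answers).most_common(1)[0]
    return top, count / len(answers)


def stub_model(prompt: str) -> str:
    """Stand-in model that breaks on one phrasing (assumption for this sketch)."""
    return "Paris" if prompt.startswith(("What", "Tell")) else "Lyon."


answer, agreement = consistency(Intent("capital", "France"), stub_model)
print(answer, agreement)
```

An agreement score below 1.0 means at least one realization diverged, which is exactly the instability the list describes. Exact matching is the simplest possible comparison; a real system would likely need a more tolerant notion of answer equivalence.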

Prompt sensitivity becomes a measurable signal rather than an invisible failure.

A Concrete Example

Consider an agent tasked with answering factual questions from public sources.

A prompt-only system issues one query and returns the result.

A data agent informed by prompt sensitivity research behaves differently:

- The question is represented as a structured intent

- Multiple prompt variants are generated from that intent

- Responses are compared for agreement

- If instability is detected, the agent retries, abstains, or flags uncertainty
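The final step, choosing between answering, retrying, abstaining, or flagging uncertainty, could be expressed as a small policy over an agreement score (the fraction of variant responses that share the most common answer). The thresholds and the names `Action` and `decide` below are assumptions chosen for illustration, not values taken from the research.

```python
from enum import Enum


class Action(Enum):
    ANSWER = "answer"
    FLAG = "flag_uncertainty"
    RETRY = "retry"
    ABSTAIN = "abstain"


def decide(agreement: float, attempts_left: int,
           accept_at: float = 1.0, flag_at: float = 0.6) -> Action:
    """Map an agreement score in [0, 1] to an action.

    accept_at and flag_at are hypothetical thresholds for this sketch.
    """
    if not 0.0 <= agreement <= 1.0:
        raise ValueError("agreement must be between 0 and 1")
    if agreement >= accept_at:
        return Action.ANSWER          # all variants agree
    if agreement >= flag_at:
        return Action.FLAG            # majority agrees: answer with a warning
    if attempts_left > 0:
        return Action.RETRY           # unstable: try again with new variants
    return Action.ABSTAIN             # unstable and out of retries


print(decide(1.0, 2), decide(2 / 3, 2), decide(0.34, 1), decide(0.34, 0))
```

Keeping the policy in one pure function makes the reliability behavior testable on its own, independent of any model call.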

The agent optimizes for reliability, not eloquence.

From Research Insight to System Design

The key contribution of prompt sensitivity research is not better prompt engineering advice. It is a constraint on how AI systems must be built.

Language models are powerful but brittle. When deployed inside unstructured pipelines, their failures are silent and unpredictable. When embedded inside data agents, their behavior becomes observable, testable, and controllable.

This shift from prompts to agents marks a transition from experimentation to infrastructure.

Why This Matters

As AI systems move toward autonomy, the cost of silent failure increases. Systems must know not only how to answer, but when not to trust themselves.

Prompt sensitivity research makes one thing clear. Reliable AI will not emerge from better wording. It will emerge from better abstractions.

Data agents are one such abstraction.

Contact: contact@heisenberg.network