Teaching AI Agents to Distinguish Signal from Noise: The Graph Search Story

Read time: 3 min | Published in: IEEE TKDE, 2022

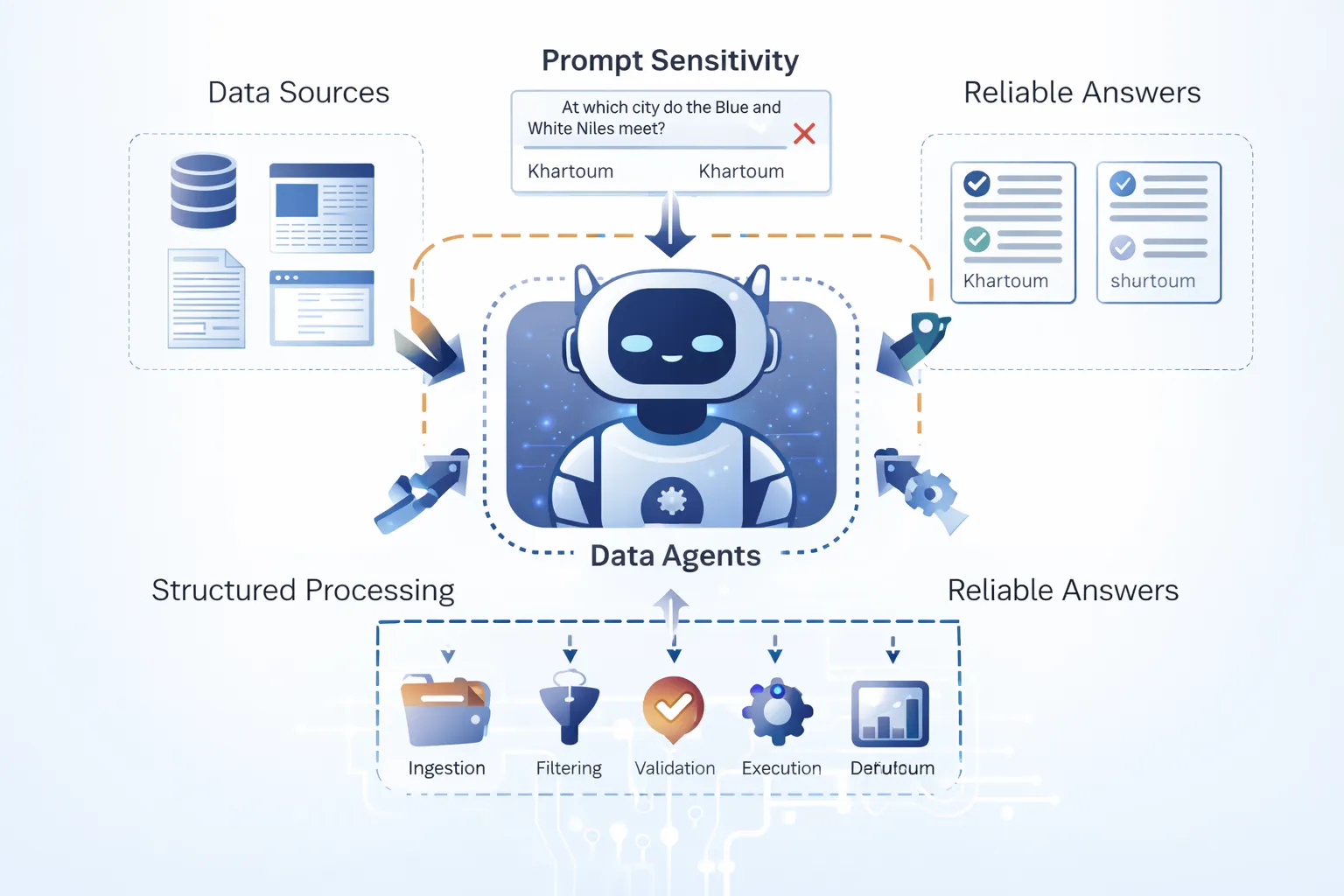

The Credibility Problem

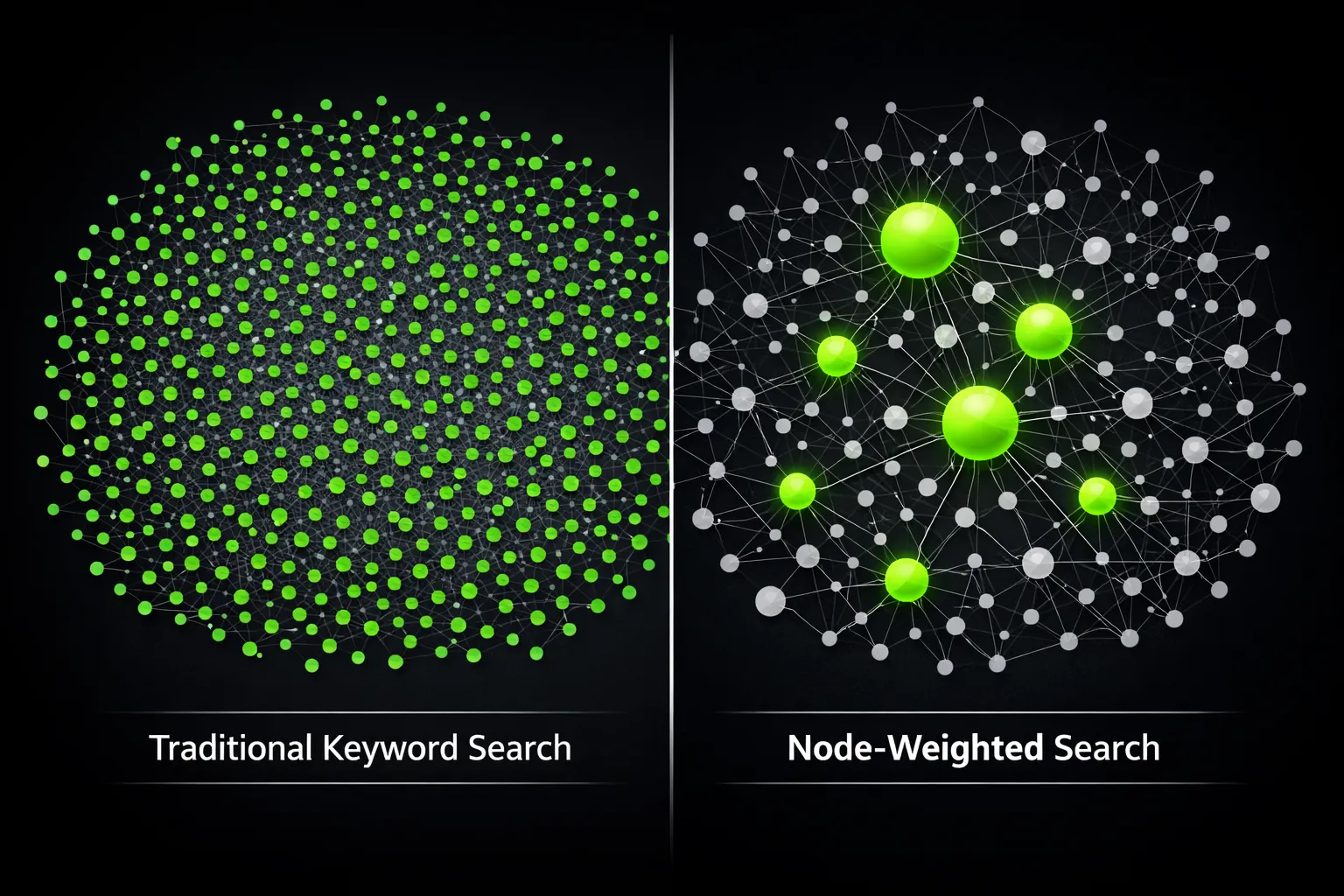

When AI agents query Twitter for developer sentiment, they get 50,000+ tweets. When they search LinkedIn for "blockchain engineers," they find 100,000+ profiles. Traditional keyword search treats all sources equally—but a tweet from a core protocol developer carries fundamentally different value than random speculation.

The core issue: Prior graph search algorithms optimize for structural proximity (shortest paths between keywords) while ignoring node-level attributes like authority, credibility, or expertise. Keyword matching identifies potential sources, but provides no mechanism to assess information quality.

Our Contribution: Optimizing for Source Importance

We introduced node cost as a first-class optimization objective in graph keyword search, posing three problems:

- Edge Distance (baseline): Minimize path lengths between keywords—the standard approach

- Node Cost (our innovation): Minimize node importance scores along paths—directly optimizing for credibility

- Combined Objective (our innovation): Joint optimization via tunable parameter λ

Theoretical Results

Complexity: Proved node cost and combined optimization are NP-hard (reduction from 3-SAT)

Approximation: Developed polynomial-time algorithms with factor-2 approximation guarantee—our solution is provably at most 2× worse than optimal

Key innovation: Transform node weights into edge weights, allowing Dijkstra's algorithm to incorporate importance while maintaining polynomial complexity and preserving approximation bounds

Experimental Validation

We validated across four real-world datasets:

Expert Team Formation (DBLP: 200K researchers, 615K collaborations)

Result: Teams from node cost optimization published in 76% higher-ranked venues than edge-only methods

Enterprise Databases (TPC-E benchmark)

Result: Our relationships appeared 34% more frequently in actual transactions

Protein Networks (70K proteins, 425K interactions)

Result: Identified proteins with 76% higher expression levels—critical for drug target viability

User Study (IMDb: 1M nodes, 3M edges)

Result: Node-weighted methods achieved 15-20% higher relevance scores (p < 0.05)

Implications for AI Systems

This research addresses a critical challenge: as AI agents become autonomous, they need algorithmic credibility assessment—not human-in-the-loop evaluation.

Key insights:

- Generalizability: Node weight framework applies across domains—h-index for researchers, expression levels for proteins, table importance for databases

- Tractability: Despite NP-hardness, approximation algorithms with provable bounds enable practical deployment

- Theoretical rigor: Factor-2 guarantees provide formal bounds on algorithm behavior, supporting explainability requirements

Applicable Domains

These techniques apply wherever graph data includes node importance:

- Social platforms: ranking by author authority

- Professional networks: candidate search by expertise

- Content systems: creator credibility in recommendations

- Market intelligence: data source reliability assessment

Contact: contact@heisenberg.network